Machines do not know how to decide

Algorithms governing artificial intelligence and robots must be subject to ethical and moral rules

Barcelona. Machines, although it may be hard to believe, are subject to moral and ethical principles. The more sophisticated they are, that is, the more degrees of freedom and autonomy they have, the greater their ethical component. This is the case with robots and artificial intelligence. Whether these principles are respected depends largely on programming, that is, on the algorithms created so that machines perform the function for which they were designed. In other words, it depends on human intervention in their design. The study of these ethical rules and principles, which goes far beyond the principle Isaac Asimov set out in 1950 that a robot may never harm a human being, is called roboethics. It is a discipline that grows with each passing day and has algorithm programming at its core.

Deciding in case of conflict

Ramón López de Mántaras, research professor at the CSIC and former director of the pioneering Artificial Intelligence Research Institute (IIIA), is one of the researchers in Spain who has reflected most on this subject. "How do we teach an autonomous car whether to turn right or left in case of conflict?" he asks from the outset. For the expert, these vehicles are nothing more than "robots with wheels" governed by artificial intelligence. "We can teach them to respect traffic rules, but it's not clear that they know how to respond appropriately to an unforeseen incident". Examples include a large puddle of water, in front of which a human would intuitively brake, or the choice among alternatives when a collision is imminent. "Do you hit an animal or swerve into another car? What if it's a person?" he says. He adds: "If you are not driving, and are not even able to drive, who is responsible?"

This is one of the many ethically charged technical dilemmas that algorithm programmers often have to resolve. Others are equally pressing, if not more so. One of the most relevant has to do with bias. "The programmer lives in a specific context, culture or circumstances", summarises López de Mántaras. He or she may therefore introduce values that are not universal, such as parameters with a racial or gender bias or parameters based on social prejudices. The resulting program will carry that bias. "It will not be the fault of the machine or the algorithm, but of the programmer", he says. "Some supposedly advanced programs label people of African origin as gorillas".

The problem is aggravated when the algorithm is in charge of selecting personnel, predicting a conviction or reviewing a sentence, as has already happened in several countries. It is also aggravated when the algorithm chooses which workers a large company dismisses or, far more seriously, when it determines the targets of autonomous lethal weapons, such as military drones.

Robots? With bodies, please

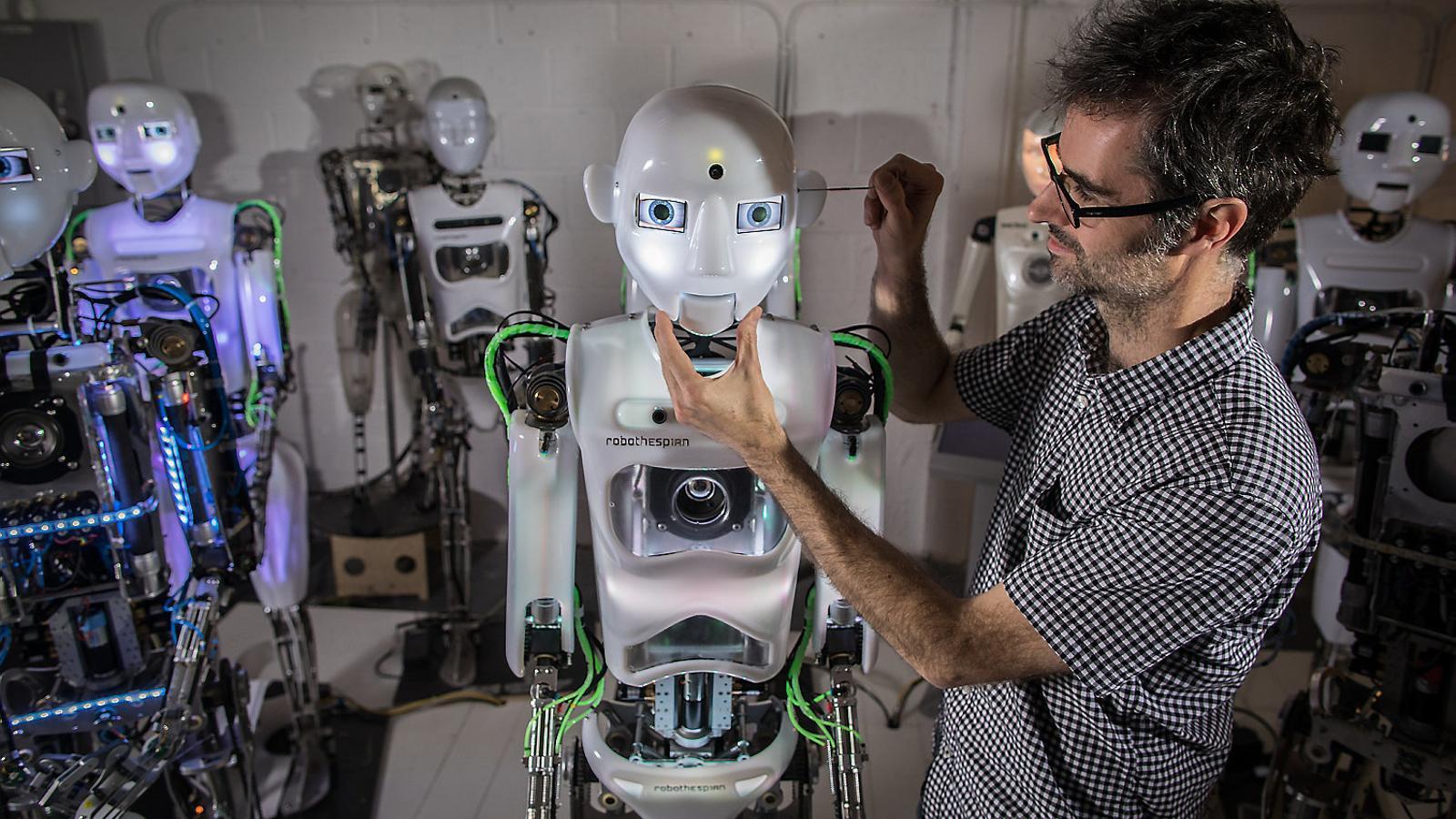

These computing devices, and many others programmed with artificial intelligence, tend to be called robots, essentially because they are automated, have a certain degree of autonomy and can improve their performance as they acquire knowledge. But not everyone agrees. Paul Verschure, ICREA professor at the Institute for Bioengineering of Catalonia (IBEC), argues that the term can be understood as a metaphor, but not as a reality. "A robot needs a body, whatever its shape and whatever the algorithms that govern its functionality", he says. That shape can be humanoid or, on the contrary, bear no resemblance to a human, as is the case with most industrial robots. According to Verschure, a human form has a lot to do with an essential capability: communication. "In certain circumstances, including in healthcare, humanoid form and movements facilitate human-machine interaction", he says. He believes this can help build relationships of trust, especially with elderly or cognitively impaired patients. But the ethical debate remains open. Some believe it can lead to dependence on the machine or to its substituting for human contact. Algorithms must clearly take this risk into account.

Be that as it may, although we tend to think of robots as tools that perform repetitive, complex, dangerous or assistive tasks and whose first principle is not to harm humans, Verschure says we are still very far from making them a reliable reality. More progress has probably been made in generating algorithms than in giving robots autonomy, says López de Mántaras. "Except for those that work in the automotive industry or in companies with similar needs, the rest are very inefficient and their energy consumption is very high", says the IBEC expert. "Thinking about sophisticated and highly autonomous robots is still science fiction", he adds. Robots remain a mechanical fantasy, but artificial intelligence and algorithms that help with decision-making, and increasingly make decisions for us, are a much closer reality. Yet, as López de Mántaras says, "machines do not know how to decide". That is why legal and ethical regulations are needed.