Every day ChatGPT consumes at least 100 million liters of fresh water.

A search on ChatGPT requires ten times more energy than the same search on Google.

Geneva. Just a few days ago, Amazon announced plans to build a gigantic data center in the state of Indiana, United States. This computing center will be dedicated to training artificial intelligence systems with the goal of simulating the human brain. The electricity bill will be steep, as this complex will consume approximately 2.2 gigawatts of electricity, enough to power an entire city.

The fact is that, in the last decade, data management and analysis have become one of the main consumers of energy and other resources on the planet. The sheer volume and complexity of the information being processed have been exacerbated by the emergence of artificial intelligence, largely due to the powerful language models behind systems like ChatGPT and Llama.

"The global impact is disproportionate. The problem is that we only know of a dozen models, but there are perhaps thousands being trained with the same data," says Ulises Cortés, director of artificial intelligence research at the Barcelona Supercomputing Center (BSC).

The exponential growth of AI-based technologies and the resulting increase in energy consumption are the main challenges facing not only the companies that produce these models but also the respective governments that must manage demand. Companies such as Facebook, Google, and Amazon are seeking strategies to reduce this impact, some of which involve installing large areas of photovoltaic panels or building small nuclear reactors near data centers. However, none of these appear to be a sustainable solution on a planet severely affected by rising temperatures and an ever-looming energy crisis.

But how much does AI cost?

The human brain, with all its complexity and computing power, consumes around 0.3 kilowatt hours per day, approximately 20% of the total energy our body needs to perform basic functions. This figure contrasts sharply with the consumption of the most powerful artificial computing tools in existence, which have become ubiquitous in our lives.

However, measuring the energy consumed by an AI model is not like calculating the gasoline consumed by a car or the electricity used by a refrigerator. There is no established methodology or database containing the necessary information. No one has been able to quantify it in detail. Furthermore, the lack of transparency among the developer companies also makes it difficult to obtain a clear figure.

Some calculations made by the Massachusetts Institute of Technology (MIT) estimate that training GPT-4, from the company OpenAI, cost more than 100 million dollars and consumed around 50 gigawatt hours of electricity, enough to power the entire population of Barcelona for a day and a half. However, this calculation is approximate, since models like GPT-4 or ChatGPT are "closed source," meaning no one other than the developers has access to the details of the model or its creation and training process. Therefore, without more information from the companies, it is impossible to be more precise. The most reliable way to make estimates is to start from "open source" models, such as China's DeepSeek, and extrapolate their results.

The data problem

One of the factors that appears to have the greatest impact on consumption is the initial data processing. Most models, like ChatGPT, are trained on a very large volume of information found on the internet. Much of this information is redundant or useless, but filtering is complex and requires intensive human oversight, making it a costly process that companies often forgo.

"It's thought that up to 30% of energy expenditure is spent on poor training system design," Cortés points out. The only model that so far appears to have curated data before training is DeepSeek, the large AI model developed by China.

Looking to the future and taking into account the evolution of AI, according to some projections published by the Lawrence Berkeley National Laboratory, by 2028 more than half of the electricity consumed by data centers will be used for artificial intelligence. At this point, these tools will consume as much as 22% of the.

AI consumes not only electricity but also a lot of water. A Cornell University study estimated that each ChatGPT interaction of between 10 and 40 prompts uses half a litre of water, and warned that, although this might seem small on an individual level, this AI can handle between 100 and 200 million requests per day, which means 100 million litres of fresh water per day, a scarce resource to which a quarter of the world's population does not have access.
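The arithmetic behind the daily water figure can be sketched roughly as follows. This is only an illustration, assuming that each of the 100 to 200 million daily requests counts as one half-litre interaction; the function and constant names are hypothetical and do not come from the study.

```python
# Cornell estimate quoted above: one interaction (10 to 40 prompts) uses about half a litre.
LITRES_PER_INTERACTION = 0.5


def daily_water_litres(interactions_per_day: float,
                       litres_per_interaction: float = LITRES_PER_INTERACTION) -> float:
    """Return the estimated fresh water used per day, in litres."""
    return interactions_per_day * litres_per_interaction


# Assumption: every daily request is treated as one full interaction.
low = daily_water_litres(100e6)
high = daily_water_litres(200e6)
print(f"{low / 1e6:.0f} to {high / 1e6:.0f} million litres per day")
```

Under this assumption, the figure of 100 million litres per day corresponds to the upper end of the request range.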

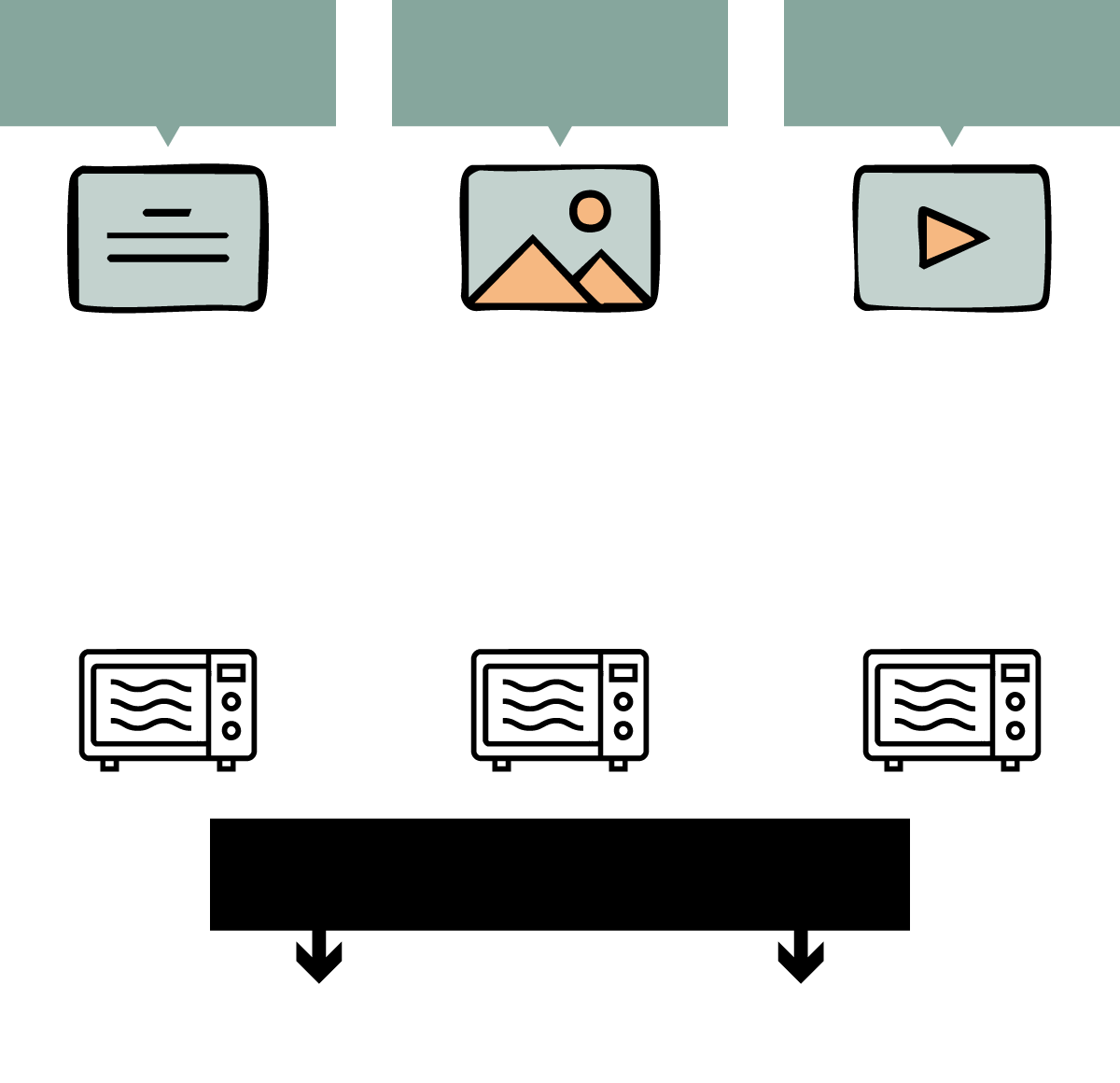

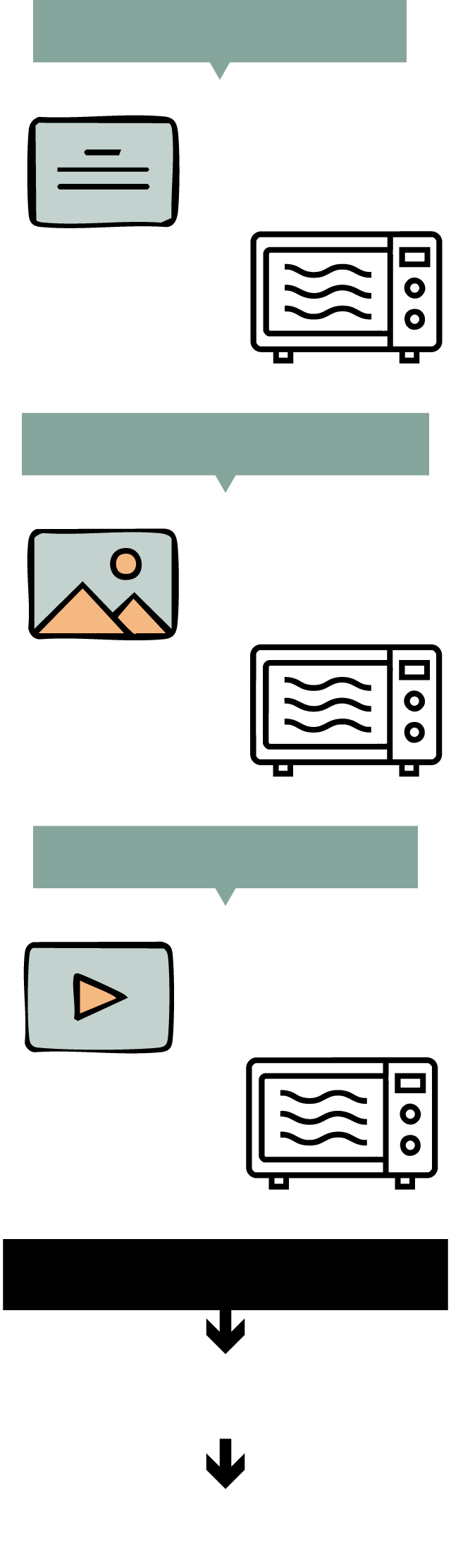

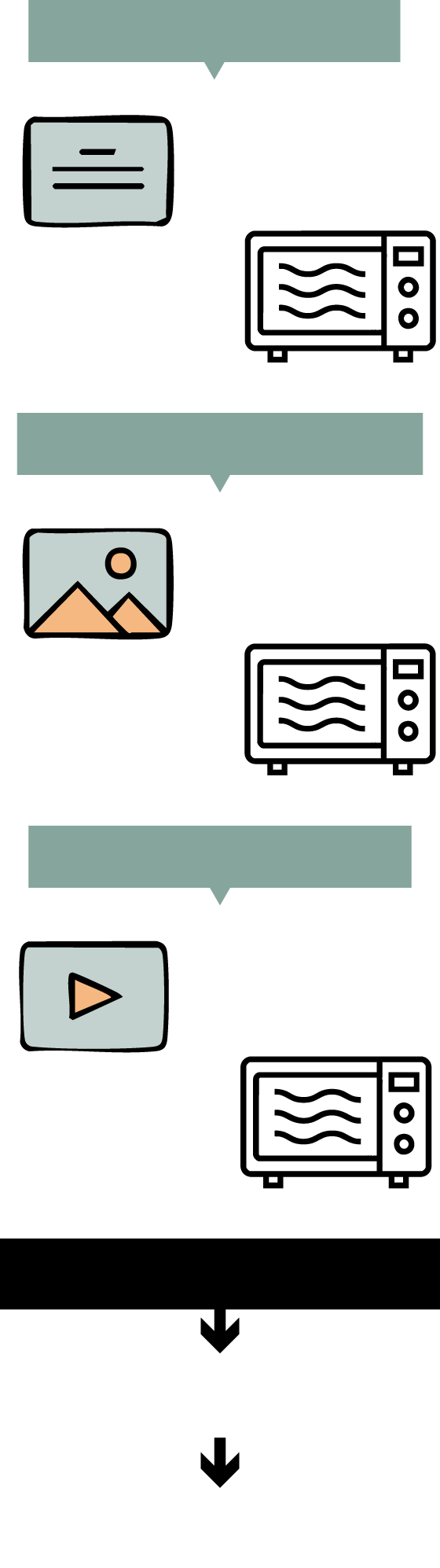

The cost of generating text, images and videos

Text generation is possibly one of the most common applications of large language models. In each "conversation," users typically string together several questions and answers. According to estimates by MIT Technology Review, the Llama 3.1 model from Meta, Facebook's parent company, with 405 billion parameters, requires around 6,700 joules for each response, the energy needed to run a microwave for eight seconds. However, models like ChatGPT are thought to contain about three times as many parameters, which would roughly triple the consumption of Meta's model.

Contrary to what one might think, generating images is more economical. Because the architecture of image-generating models differs significantly from that of the models used to generate text, they consume around 4,400 joules of energy for a standard-quality image, the equivalent of running a microwave for five and a half seconds.

Finally, when it comes to video generation, consumption skyrockets. Generating a video of just five seconds with 16 frames per second requires about 3.4 million joules, the equivalent of running a microwave for over an hour.
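The microwave comparisons above follow from dividing energy by power. The sketch below reproduces them approximately, assuming an 800-watt microwave; that rating is an assumption, not a figure from the article, and the names used are purely illustrative.

```python
MICROWAVE_WATTS = 800.0  # assumed power rating; not stated in the article

# Energy figures quoted in the text, in joules.
ENERGY_JOULES = {
    "text response (Llama 3.1)": 6_700,
    "standard-quality image": 4_400,
    "five-second video": 3_400_000,
}


def microwave_seconds(joules: float, watts: float = MICROWAVE_WATTS) -> float:
    """Seconds a microwave of the given power runs on this much energy (1 W = 1 J/s)."""
    return joules / watts


for task, joules in ENERGY_JOULES.items():
    seconds = microwave_seconds(joules)
    print(f"{task}: {seconds:.1f} s ({seconds / 60:.1f} min)")
```

With this assumed rating, the results land close to the article's eight seconds, five and a half seconds, and just over an hour; a different wattage would shift all three proportionally.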

Some estimates put the global number of daily interactions with ChatGPT at around one billion, a figure which, ironically according to ChatGPT itself, is equal to the number of microwaves in the world.

Although some AI models, such as the large language models behind ChatGPT, consume large amounts of resources, other models are focused specifically on making processes more efficient and use much less energy. This is the case, for example, with managing a building's temperature or predicting energy consumption in a given region. "When energy management becomes more complex, using these tools can help us find an optimal solution," says Joaquim Melendez Frigola, a professor at the University of Girona and an expert in systems engineering. "These AI models can help us with complex management problems by increasing the speed and complexity of defining the environment," he adds. They are based on much simpler models than large language models and are, consequently, much more economical.

The thirst for AI

AI isn't just hungry for electricity; it's also thirsty and drinks a lot of water. Much of this water is used to cool the electronics in data centers. Sam Altman, co-founder and CEO of OpenAI, the company behind ChatGPT, states that each question we ask ChatGPT consumes only one-fifteenth of a teaspoon of water. However, this figure contrasts with studies published in journals such as Nature, which estimate that consumption is more like thirty times the amount Altman claims; other estimates go further and put it in the hundreds of liters.

To offset the high energy consumption of data centers, some large technology companies are investing in the development of power plants near their facilities. Among the alternatives are the installation of large areas of photovoltaic panels or even the development of new nuclear power plants. Just a few days ago, Google signed an agreement with Commonwealth Fusion Systems, a company that develops nuclear fusion technology. Founded in 2018 as a spin-off from MIT, this company counts among its investors Bill Gates himself, founder of Microsoft.

"Bill Gates saw this coming and has been investing in the development of micronuclear power plants for years," says Cortés. Along these lines, Gates founded TerraPower, a company developing a new generation of compact nuclear fission reactors. Earlier this year, the company signed an agreement with Sabey Data Centers, one of the leading data center builders in the United States.

Towards a sustainable and responsible model

Artificial intelligence is advancing at a breakneck pace, and its development and adoption are largely unregulated. Large technology companies seem to enjoy absolute freedom when it comes to making intensive use of resources essential to society, such as electricity and water.

"It's surprising that governments have allowed themselves to be lured by a technology that is potentially so harmful, not only for the environment but also for education and critical thinking," says Cortés, who proposes introducing environmental taxes similar to those applied to combustion vehicles. "Why does everyone have access to these types of tools without being charged an environmental tax for their use? Should users be aware of the impact of using this technology?" he asks.

The BSC's director of AI research is critical but optimistic about the sector's future. "Climate change is accelerating precisely because of the careless use of technology. We hope to educate new generations to understand that their future depends on its responsible use," he concludes.